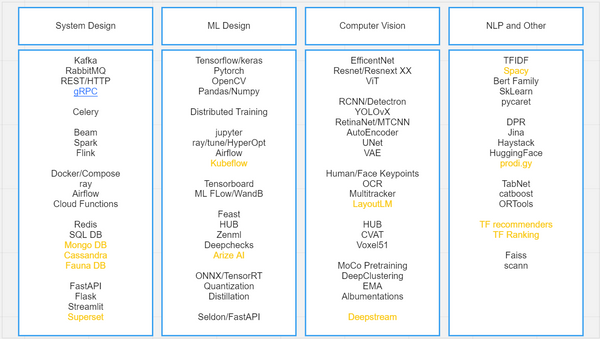

Hyperparameter Optimisation at scale

TL;DR: Use Ray Tune or NNI. Both provide SOTA algorithms out of the box for efficient HPO.

Deep neural networks have many tunable hyperparameters that must be chosen well to get maximum accuracy out of your datasets and models. Hyperparameter optimisation (HPO) automates this choice. It can be used to find the best neural architecture (neural architecture search, NAS) by optimising layer choice, number of layers, and layer width, or to find the best learning algorithm by optimising learning rate, momentum, optimizer choice, data augmentation, etc.

We will cover:

- When to use HPO

- How HPO works

- State-of-the-art HPO algorithms

- How to use - Ray Tune and NNI

When to use HPO

First, let’s cover when not to use HPO. If your dataset is similar to the datasets used for pre-training and you are not hunting for the last 3-4% of accuracy, you should not use HPO: it can consume 10x more resources and time for such gains. In my experience, good authors and libraries provide good default values, which are the result of both intensive HPO and domain expertise. Also, model architecture HPO should not be the primary way to trade accuracy for performance on standard problems. A wide range of network architectures and sub-architectures already make that trade. For example, in vision classification, you can choose among architectures from ViT to EfficientNet to MobileNet, with small, medium, and large variants within each. They provide 10-100x performance gains at a cost of 3-10% accuracy. Optimisation techniques are the next best choice. However, HPO can also be used to evaluate all these choices.

But if you are an academic user for whom a 1-2% gain decides whether a result is SOTA, or if a 3-4% improvement has a significant business impact, then HPO is a really good choice. HPO also helps when standard models and configurations are not yielding good results. It shines in no-man’s land, such as new algorithm development or custom model architecture design, where tuning a large number of hyperparameters manually is impractical. I have used it to design a custom model architecture that provides 10x performance while retaining accuracy for a specific segmentation task.

How HPO works

HPO consists of two parts: a search algorithm and a scheduler. The search algorithm selects the hyperparameter values for each trial from the full parameter space, and the scheduler decides how long each trial runs or how many resources it gets.

The two basic search algorithms are grid search and random search. Random search generally works better than grid search for the same budget, and it is also the best choice for embarrassingly parallel optimisation. One state-of-the-art search algorithm is Bayesian optimisation. It fits a surrogate Bayesian model to the search space to find optimal values efficiently. It can select the next value based on maximum uncertainty (exploration) or maximum expected gain (exploitation) under the surrogate model, and then refines the model with each trial result. Two major drawbacks are that, in its naïve form, the algorithm is not parallel (selecting the next value depends on evaluating the current one) and it only supports continuous values. However, advanced implementations such as TPE and BOHB address these limitations.
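One common explanation for why random search tends to beat grid search is that, for the same number of trials, it tries more distinct values of each individual hyperparameter. The sketch below illustrates this with a hypothetical two-parameter search space; the names `grid_search`, `random_search`, `momentum`, and `dropout` are illustrative assumptions and not part of any library.

```python
import itertools
import random


def grid_search(space, points_per_dim):
    """Return every combination of evenly spaced values per hyperparameter."""
    axes = []
    for low, high in space.values():
        step = (high - low) / (points_per_dim - 1)
        axes.append([low + i * step for i in range(points_per_dim)])
    return [dict(zip(space, combo)) for combo in itertools.product(*axes)]


def random_search(space, num_trials, seed=0):
    """Sample each hyperparameter independently and uniformly for every trial."""
    rng = random.Random(seed)
    return [
        {name: rng.uniform(low, high) for name, (low, high) in space.items()}
        for _ in range(num_trials)
    ]


# Hypothetical search space: (low, high) bounds per hyperparameter.
space = {"momentum": (0.0, 0.99), "dropout": (0.0, 0.5)}

grid_trials = grid_search(space, points_per_dim=3)  # 3 x 3 = 9 trials
random_trials = random_search(space, num_trials=9)  # same budget of 9 trials

# Count how many distinct values of each hyperparameter were tried.
grid_distinct = {k: len({t[k] for t in grid_trials}) for k in space}
random_distinct = {k: len({t[k] for t in random_trials}) for k in space}
print("grid:", grid_distinct)
print("random:", random_distinct)
```

With nine trials, the grid only ever tests three values of each hyperparameter, while random sampling tests nine. If only one of the two hyperparameters really matters, the random trials explore it three times more densely.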

A naive scheduler runs all trials for their complete duration and evaluates them afterwards. Successive halving, or its asynchronous variant (ASHA), periodically keeps the best half of the trials and discards the rest, continuing until a single configuration remains. This concentrates resources on promising trials, resulting in faster training. The Hyperband scheduler improves on this further by starting new configuration trials in place of discarded ones. This increases the number of trials evaluated, resulting in better hyperparameters. ASHA and Hyperband require that every configuration can be stopped early and that a validation score is available at intermediate points.
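The halving idea can be sketched in a few lines of plain Python. This is a simplified, synchronous version under stated assumptions: every survivor trains for one more round between cuts, and `toy_score` is a made-up stand-in for real training that peaks at a learning rate of 1e-3. Real schedulers such as ASHA are asynchronous and usually grow the budget per rung, so treat this as an illustration rather than the actual implementation.

```python
import math


def successive_halving(configs, train_one_round, keep_fraction=0.5):
    """Repeatedly train the survivors for one round and keep the best fraction.

    train_one_round(config, rounds_done) returns a validation score
    (higher is better) after `rounds_done` rounds of training.
    Returns the winning config and the number of training rounds spent.
    """
    survivors = list(configs)
    rounds_done = 0
    rounds_spent = 0
    while len(survivors) > 1:
        rounds_done += 1
        scored = [(train_one_round(c, rounds_done), c) for c in survivors]
        rounds_spent += len(survivors)
        # Sort by score only, best first; the sort is stable for ties.
        scored.sort(key=lambda pair: pair[0], reverse=True)
        keep = max(1, int(len(scored) * keep_fraction))
        survivors = [config for _, config in scored[:keep]]
    return survivors[0], rounds_spent


def toy_score(config, rounds_done):
    """Hypothetical validation score: peaks at lr = 1e-3, improves with training."""
    closeness = 1.0 - abs(math.log10(config["lr"]) + 3.0) / 5.0
    return closeness * (1.0 - 0.5 ** rounds_done)


configs = [{"lr": 10.0 ** -k} for k in range(8)]  # lr from 1 down to 1e-7
best, rounds_spent = successive_halving(configs, toy_score)
naive_rounds = len(configs) * 3  # every config trained for all 3 rounds
print(best, rounds_spent, naive_rounds)
```

In this toy run, 8 configurations are cut to 4, then 2, then 1, spending 14 training rounds instead of the 24 that a naive scheduler would need to train every configuration for all 3 rounds.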

State-of-the-art methods such as BOHB, which combines Bayesian optimisation and Hyperband, can lead to a 5-20x speed-up in HPO.

State-of-the-art HPO algorithms

How to use - Ray Tune

Ray Tune is a really good tool for HPO. It is simple and powerful. As part of the Ray ecosystem, it scales to multi-GPU and distributed environments. It provides all of the above as well as additional SOTA algorithms. You can find more details here. Microsoft NNI is also a really good choice if you are using the PyTorch ecosystem. It is even better for NAS use cases, but its support for non-PyTorch frameworks is limited.

HPO with Bayesian optimisation and the HyperBand scheduler can be quickly implemented in Ray Tune, using the following as a reference:

```python
from ray import tune
from ray.air import session  # Newer Ray releases deprecate ray.air.session; check the docs.
from ray.tune.schedulers import AsyncHyperBandScheduler
from ray.tune.search import ConcurrencyLimiter
from ray.tune.search.hyperopt import HyperOptSearch


# 1. Define an objective function.
def trainable(config):
    # Import torch/tf/keras here rather than at module level if you use them;
    # this works around a known issue with GPU trials.
    # `model`, `one_epoch_training` and `calc_accuracy` are placeholders for your own code.
    for epoch in range(20):  # "Train" for 20 epochs.
        one_epoch_training(model, config["lr"], config["a"])
        accuracy = calc_accuracy(model)
        # Report once per epoch so the scheduler can stop weak trials early.
        session.report({"accuracy": accuracy})  # Send the score to Tune.


# 2. Define a search space.
search_space = {
    "lr": tune.loguniform(1e-8, 1e-2, base=10),
    "a": tune.choice([1, 2, 3]),
}

# 3. Define the search algorithm and scheduler.
search_algo = HyperOptSearch()
# Limit concurrent trials since BO doesn't parallelize very well.
search_algo = ConcurrencyLimiter(search_algo, max_concurrent=4)
# metric and mode are set once, in TuneConfig below, not on the scheduler as well.
scheduler = AsyncHyperBandScheduler(grace_period=5)

# 4. Start a Tune run that maximizes accuracy.
tune_config = tune.TuneConfig(
    search_alg=search_algo,
    scheduler=scheduler,  # The scheduler belongs in TuneConfig, not in Tuner.
    metric="accuracy",
    mode="max",
    num_samples=20,  # Number of trials
)
tuner = tune.Tuner(
    trainable,
    tune_config=tune_config,
    param_space=search_space,
)
results = tuner.fit()
print(results.get_best_result(metric="accuracy", mode="max").config)
```

This should get you started on the journey of optimising hyperparameters efficiently with state-of-the-art algorithms. Combining HPO with techniques like µTransfer makes it even more promising. Researchers from Microsoft and OpenAI tuned the hyperparameters of a 40-million-parameter proxy GPT-3 model and then transferred them to the 6.7B-parameter variant. With a tuning cost of only 7% of the pretraining budget, the resulting model reportedly outperformed the published 6.7B results and performed comparably to the 13B variant. To learn more, these are some good references:

- Ray Tune: https://docs.ray.io/en/latest/tune/key-concepts.html

- Good blogs for reference: Blog 1, Blog 2

- FLAML: https://github.com/microsoft/FLAML

- Fabolas: https://arxiv.org/abs/1605.07079

- PBT: https://www.deepmind.com/blog/population-based-training-of-neural-networks and https://www.deepmind.com/publications/faster-improvement-rate-population-based-training

- µTransfer: https://decentdescent.org/tp5.html